Using The Big Lebowski to Understand Artificial Intelligence

A Collaboration with Ben Saltiel

AI does not start and stop at your convenience. —Walter Sobchak (probably)

Above: Good night, sweet humanity.

In his 1962 book, Profiles of the Future: An Inquiry into the Limits of the Possible, science fiction writer Arthur C. Clarke formulated his famous Three Laws.

The first two live in the shadow of the third, which reads, “Any sufficiently advanced technology is indistinguishable from magic.”

If you've interacted with Artificial Intelligence (AI) in any way, shape, or form, you've seen the magic firsthand.

As of this writing, OpenAI has just released its new GPT-4o model, which is, to use a technical term, bonkers. Check it out here and here and here.

That said, every magician has his secrets. Even AI.

I partnered with my good friend Ben Saltiel to peel back AI’s mathematical curtain by means of an unlikely guide: The Big Lebowski.

Before we get to The Dude, more on Ben.

Ben’s writing offers a blend of deep insight, biting sarcasm, and dry humor. He is an analyst’s analyst whose work is mutually exclusive, collectively exhaustive, sober, and statistically significant.

In his words:

Ben prides himself on being both a “Pareto Polymath” and a broke man’s Nassim Nicholas Taleb (with neither the temper nor the obsession with picking fights with strangers on the internet). His work investing in and scaling startups complements his years of reading and reflection.

And now, over to Ben.

Since the public release of OpenAI’s ChatGPT, it’s impossible to open LinkedIn, X, or any other news outlet without seeing some mention of AI or Large Language Models (LLMs).

Technologists, politicians, and business leaders all agree that many facets of human life will change as this technology rapidly advances. Companies and individuals are increasingly using LLMs to accomplish tasks faster than they ever could before, which is leading to incredibly exciting—read as: terrifying—commercial, social, societal, and political ramifications.

Despite the hype, only a small percentage of people actually understand what an LLM is and how these models really work. Though far from an expert, I found that the best way to share what I do know about the topic is through the lens of the beloved 1998 film The Big Lebowski.

For those unlucky souls who have not yet seen it, the film’s tension revolves around a lack of context, which leads to a case of mistaken identity. Prior knowledge of The Big Lebowski is unnecessary to read on, but, as it is an all-time classic, I suggest you read the piece in full, reflect on how LLMs will impact/augment your work/life, and then give it a watch.

Data and Compression

Let’s start with some basics. The models that power AI are algorithms: mathematical representations designed to detect patterns learned by training on data sets. These data sets can contain all kinds of information in many different formats. They can come already tagged/labeled by humans, so that the model can see the correct input and output example in order to answer new but similar questions (i.e. Supervised Learning).

Or, the model can also be given lots of raw data and an objective to follow without explicit instructions on how to accomplish the task (i.e. Unsupervised Learning).
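To make the supervised/unsupervised distinction concrete, here is a toy sketch in Python. It is not how LLMs are trained; it only contrasts the two setups. The hypothetical `fit_line` learns a weight and a bias from labeled input/output pairs (supervised), while the hypothetical `two_means` is a two-cluster k-means that finds groups in unlabeled numbers with no answers provided (unsupervised). All names and data values are made up for illustration.

```python
def fit_line(xs, ys):
    """Supervised: learn weight w and bias b from labeled (x, y) pairs via least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b

def two_means(points, iterations=10):
    """Unsupervised: split unlabeled 1-D points into two clusters (k-means with k=2)."""
    centers = [min(points), max(points)]
    for _ in range(iterations):
        groups = [[], []]
        for p in points:
            nearest = 0 if abs(p - centers[0]) <= abs(p - centers[1]) else 1
            groups[nearest].append(p)
        # Move each center to the average of the points assigned to it.
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers

# Labeled examples (hypothetical): each input comes with its correct answer.
w, b = fit_line([1, 2, 3, 4], [3, 6, 9, 12])
print(w * 5 + b)  # answer a new but similar question

# Unlabeled examples: no answers given; the algorithm finds the structure itself.
print(two_means([1.0, 1.2, 0.8, 9.9, 10.1, 10.0]))
```

The learned `w` and `b` play the role of the model's parameters: the weights it extracts from its training data.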

Hybrid models use both labeled and unlabeled data. In any case, the primary factors affecting a model’s effectiveness are the quantity and quality of the data on which it is trained. Even with fine-tuned parameters (i.e. the weights the model learns from the training data), without enough high-quality data, any model will be useless.

LLMs power ChatGPT and other language-prompt interfaces. An LLM differs from traditional machine learning models in its size (some contain upwards of 70 billion parameters) and its design (which enables output based on conversational text prompts). LLMs are first trained on massive sets of internet data that are compressed into these many parameters. This forms the basis of the neural network that tries to predict the next word in a sequence.[1]
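The idea of predicting the next word can be sketched with a far simpler model than an LLM: a bigram counter that remembers which word most often followed each word in its training text. This is a swapped-in toy technique, not how LLMs work internally (they use neural networks that look at long stretches of context), and the corpus and function names below are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

corpus = "the dude abides . the dude bowls . the rug really tied the room together"
model = train_bigrams(corpus)
print(predict_next(model, "the"))     # "dude" follows "the" most often in this corpus
print(predict_next(model, "walter"))  # never seen in training, so no prediction
```

The counter can only echo patterns it has seen, which is a small-scale version of why training data quantity and quality matter so much.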

While this pretraining stage is largely unsupervised, the resulting model is not very useful on its own. To improve it, OpenAI, Google, and the other companies behind ChatGPT, Gemini, and similar products have humans help train and refine their models. This process is called fine-tuning: the internet training data is swapped out for a smaller set of human-written or human-reviewed example responses, and the model continues training on those.
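Here is a deliberately tiny sketch of the pretrain-then-fine-tune idea, assuming a one-parameter model `y = w * x` trained by gradient descent. It only shows the mechanics: the fine-tuning run starts from the weight learned during pretraining rather than from scratch, and trains on a smaller, curated data set. Real fine-tuning adjusts billions of parameters; the data sets, learning rates, and step counts here are hypothetical.

```python
def train(w, data, lr, steps):
    """Gradient descent on mean squared error for the one-parameter model y = w * x."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

# "Pretraining": plenty of generic data, starting from an untrained weight of 0.
pretrain_data = [(x, 2.0 * x) for x in range(1, 11)]
w_pre = train(0.0, pretrain_data, lr=0.005, steps=500)

# "Fine-tuning": keep the learned weight, swap in a small curated data set, train briefly.
finetune_data = [(1.0, 2.2), (2.0, 4.4)]
w_ft = train(w_pre, finetune_data, lr=0.05, steps=200)

print(round(w_pre, 3), round(w_ft, 3))
```

With these settings, the pretrained weight settles near 2.0 and the fine-tuned weight near 2.2, the value implied by the curated examples.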

Over time, if successful, the LLM should be able to provide accurate responses to increasingly difficult questions. This is the basic process that makes LLMs useful to layfolk.

Sounds simple, right? Not so fast. There are a few more foundational concepts you need to understand before we get into The Big Lebowski. The first of these is word vectors.

Word Vectors and Association

A word vector represents a word as a list of numbers. These numbers work like coordinates (e.g. 0.0041, 0.1441, -0.9782, etc.), and the more closely two words are associated, the closer their numbers will be. This differs from human language, where the letters in a word reveal nothing about its relationship to other words.

In English, pizza and pasta are two closely associated words. Though they start and finish with the same letters, you could not infer any link between them from their spelling alone; otherwise, logic would dictate a similar association with words such as panda, panorama, or propaganda.[2]
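One common way to measure how “close” two word vectors are is cosine similarity. The sketch below uses three invented, three-number vectors for pizza, pasta, and panda (real models learn vectors with hundreds or thousands of numbers each), so only the comparison, not the values themselves, should be taken seriously.

```python
import math

# Hypothetical word vectors, invented for illustration only.
vectors = {
    "pizza": [0.90, 0.80, 0.10],
    "pasta": [0.85, 0.75, 0.20],
    "panda": [0.10, 0.20, 0.95],
}

def cosine_similarity(a, b):
    """1.0 means the vectors point the same way, 0 means unrelated, -1 means opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

print(cosine_similarity(vectors["pizza"], vectors["pasta"]))
print(cosine_similarity(vectors["pizza"], vectors["panda"]))
```

With these made-up numbers, pizza and pasta score close to 1 while pizza and panda score below 0.3, even though all three words share the same first and last letters.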

In LLMs, a word vector’s numbers are not static: during training, they shift as the model finds a closer association between two words, moving their numbers closer together. This helps LLMs predict the next word in a sentence based on the most closely associated word vectors. As such, these vectors carry important information about the relationships between different words.
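How do the numbers move closer during training? One classic recipe, from the word2vec family of models, is skip-gram with negative sampling: each update nudges a word’s vector toward a word it actually appeared next to and away from a randomly drawn “negative” word. The sketch below performs that update by hand on three vectors. It is a simplification (word2vec keeps separate input and output vectors per word and samples several negatives), the starting vectors are arbitrary, and modern LLMs learn their vectors while training the whole network rather than with this exact rule.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def sgns_step(word, context, negative, lr=0.1):
    """One simplified skip-gram-with-negative-sampling gradient step, in place.

    Nudges up the dot product of `word` with `context` (a word it appeared next to)
    and nudges down its dot product with `negative` (a randomly drawn word).
    """
    pos_err = 1.0 - sigmoid(dot(word, context))  # near 0 once the pair looks like neighbors
    neg_err = sigmoid(dot(word, negative))       # near 0 once the pair looks unrelated
    # Step for `word`, computed from the old context/negative vectors.
    step = [lr * (pos_err * c - neg_err * n) for c, n in zip(context, negative)]
    for i in range(len(word)):
        context[i] += lr * pos_err * word[i]   # pull context toward word
        negative[i] -= lr * neg_err * word[i]  # push negative away from word
    for i in range(len(word)):
        word[i] += step[i]

# Arbitrary starting vectors, chosen only for the demo.
pizza = [0.5, 0.0, 0.0]
pasta = [0.0, 0.5, 0.0]
panda = [0.0, -0.5, 0.0]

for _ in range(20):
    sgns_step(pizza, pasta, panda)

print(dot(pizza, pasta), dot(pizza, panda))
```

Run repeatedly, the dot product between pizza and pasta (a measure of how aligned they are) grows, while the one between pizza and panda shrinks.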

A complicating factor here is that words often have multiple meanings and interpretations depending on their context and use.

A word's denotation is its dictionary definition, whereas its connotation is its implication.

A word on its own will normally be interpreted somewhere close to its denotation. However, the situation, the grammar, or the words used before or after it can give the word an entirely different meaning through its connotation.

For example, if somebody called out “Dude!”, one would assume this person is calling out to some unspecified man. If they said “The bowling lane is ready for The Dude and his friends,” one would realize that The Dude is a specific person’s nickname.

Because a word’s meaning can shift between its denotation and its connotation, context proves vital for both humans and LLMs when trying to understand and execute requests.

While word vectors depict relationships between words, on their own they have limited applications because they lack context. This lack of context drives most of the trouble The Dude encounters in The Big Lebowski. LLMs solve the missing-context problem by using something called a transformer.

Transformers

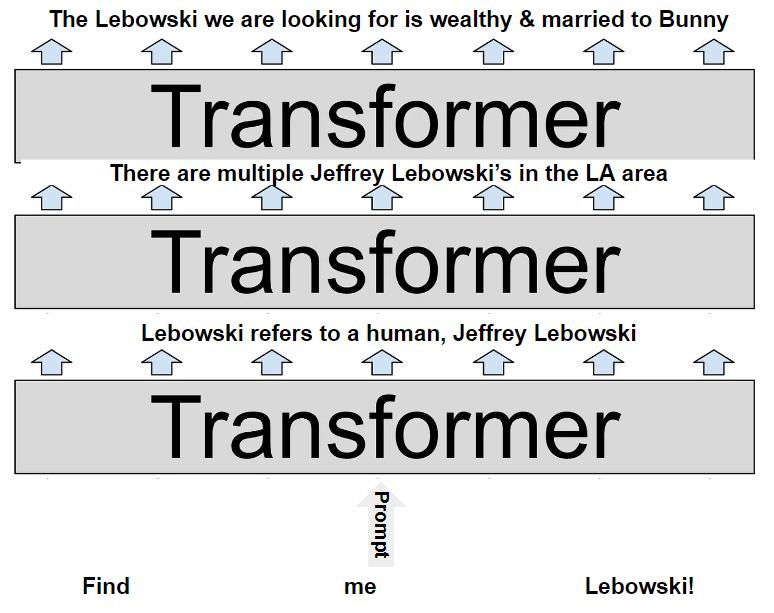

A transformer is a layer that adds information to each word by extracting context from the other words in the prompt, which helps clarify the meaning of the words used.

LLMs are built with many layers; the first few start by identifying the syntax of the sentence to iron out words with many meanings.

Later layers build on the understanding of the earlier ones to work out precisely what a sentence is trying to say. The largest LLMs stack dozens of these transformer layers (the largest version of GPT-3, for example, has 96), which is part of why they can understand your prompts even if you have typos, imperfect grammar, or incorrect sentence structure.

Now to The Big Lebowski.

The film’s protagonist is named Jeffrey Lebowski. He does not, however, go by this name; instead he prefers to be called “The Dude.” This is unknown to many of the characters he encounters throughout the film, as they seek to blackmail a different Jeffrey Lebowski (the eponymous Big Lebowski), whom they know as the wealthy husband of Bunny Lebowski.

Without giving too much away, The Dude lives a very quiet and laid-back lifestyle that largely involves bowling with his friends and drinking White Russians. He is not wealthy, just unemployed and behind on his rent.

One day, he comes home to find two thugs waiting in his apartment to collect money on behalf of their boss, noted bad guy Jackie Treehorn. The thugs say they are there to collect money from Lebowski and, as his name is Lebowski and his “wife” said he would be good for it, they demand some dollars.

The Dude immediately points out that his apartment does not look like a place a woman would live and that he is not wearing a wedding ring. The thugs realize their mistake and leave angrily. The Dude sits bewildered, processing the fact that he has just watched a grown man pee on his carpet while another stuffed his head down a toilet.

What happened was that Jackie Treehorn gave his thugs the instruction to “Collect the money Bunny owes me from her husband, Jeffrey Lebowski.”

If an LLM were processing this, the first few transformer layers would help identify that Jeffrey Lebowski refers to a specific person. The process would look something like this:

Word prompt (What is Jeffrey Lebowski?) → Translate words into numerical word vectors (0.3541, -0.9141, 0.2141, etc.) → Run the sequence through a transformer to provide additional context (Jeffrey Lebowski is a proper noun).
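The first two arrows of that pipeline can be sketched in a few lines, assuming a made-up vocabulary and embedding table (only the “jeffrey” row reuses the example numbers above; everything else is hypothetical). The point of the sketch is what it cannot do: the lookup hands “lebowski” the exact same vector whichever Lebowski is meant, which is the gap the transformer step exists to fill.

```python
# Hypothetical embedding table; every number is made up except the "jeffrey" row,
# which reuses the example values from the text.
EMBEDDINGS = {
    "what": [0.12, -0.40, 0.05],
    "who": [0.10, -0.35, 0.07],
    "is": [0.02, 0.10, -0.30],
    "the": [0.01, 0.02, 0.03],
    "big": [0.44, 0.61, -0.12],
    "jeffrey": [0.3541, -0.9141, 0.2141],
    "lebowski": [0.51, 0.33, -0.72],
}

def tokenize(prompt):
    """Step 1 (rough): lowercase, drop '?', split on spaces.
    Real LLM tokenizers break text into sub-word pieces instead."""
    return prompt.lower().replace("?", "").split()

def embed(tokens):
    """Step 2: replace each token with its word vector via a plain table lookup."""
    return [EMBEDDINGS[t] for t in tokens]

a = embed(tokenize("What is Jeffrey Lebowski?"))
b = embed(tokenize("Who is the Big Lebowski?"))

# No context yet: "lebowski" gets the identical vector in both prompts.
print(a[3] == b[4])  # True
```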

Now that the thugs have established that Lebowski is a person and the rest of the prompt mandated they go retrieve money from said individual, all seemed simple. However, as the scene unfolded, this was clearly insufficient to accomplish Treehorn’s task.

With the help of transformers, their probability of success would have been higher had they realized:[3]

Lebowski is married to Bunny

Lebowski is very wealthy

There is more than one Jeffrey Lebowski in the Greater Los Angeles area

The “real” Big Lebowski is confined to a wheelchair

The Jeffrey Lebowski whose apartment they broke into goes by “The Dude”

With this information, they would not have confused The Dude with the Big Lebowski.

Now that the first few transformer layers have clarified that the person the thugs need to collect from is the wealthy Big Lebowski, there is the practical matter of how they go about doing so. Transformers take a given prompt and work toward a response in two steps.

First, the transformer evaluates the given words, and each word looks around for other words in the prompt that carry relevant context to help make sense of it. This is the attention step.
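The attention step can be sketched as a stripped-down version of scaled dot-product self-attention, the mechanism transformer models use: each word scores every word in the prompt, a softmax turns the scores into weights, and the word’s new vector is the weighted mix. This sketch assumes a single attention head and identity query/key/value projections, which real models replace with learned matrices and many heads; the example vectors are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Simplified single-head scaled dot-product self-attention.

    Assumes identity query/key/value projections: each word's vector is
    compared directly with every word's vector in the prompt (itself included).
    """
    d = len(vectors[0])
    outputs = []
    for query in vectors:
        # How relevant is each word in the prompt to this one?
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in vectors]
        weights = softmax(scores)
        # New vector = weighted mix of all the words' vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)]
        outputs.append(mixed)
    return outputs

# Hypothetical 3-number vectors.
lebowski = [0.5, 0.3, -0.7]
wealthy = [0.9, 0.1, 0.0]
dude = [-0.2, 0.8, 0.4]

print(self_attention([wealthy, lebowski])[1])  # "lebowski" after attending to "wealthy"
print(self_attention([dude, lebowski])[1])     # same word, different neighbor, different result
```

Because the mix depends on the neighbors, the same “lebowski” vector comes out differently next to “wealthy” than next to “dude”, which is exactly the context a plain lookup could not provide.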

Next, each word considers the information gathered in the attention step and tries to predict the next word. This is the feed-forward step.
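The feed-forward step can be sketched as the small two-layer network a transformer layer applies to each word’s vector separately: multiply by one weight matrix, apply a ReLU, multiply by a second. The weights below are hypothetical and tiny; in a real model they are learned and much larger, and the result is added back to the input and normalized, which this sketch leaves out. Turning the final vectors into next-word probabilities is a separate step at the very end of the model.

```python
def relu(x):
    """Keep positive values, zero out negative ones."""
    return max(0.0, x)

def feed_forward(vector, w1, b1, w2, b2):
    """Apply a two-layer network to ONE word's vector: expand, ReLU, project back."""
    hidden = [relu(sum(w * x for w, x in zip(row, vector)) + b) for row, b in zip(w1, b1)]
    return [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(w2, b2)]

# Hypothetical weights: 2 numbers in, 3 hidden units, 2 numbers out.
W1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
B1 = [0.0, 0.0, -1.0]
W2 = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
B2 = [0.0, 0.0]

# Each word's vector is processed independently, with the same weights.
sequence = [[2.0, -1.0], [0.5, 0.5]]
print([feed_forward(v, W1, B1, W2, B2) for v in sequence])
```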

With the right data set and a properly fine-tuned model, simply prompting Lebowski instead of The Dude would have given the model the clue that the prompter was looking for the Big Lebowski.

This would have been figured out in the attention step, since the information that The Dude does not go by his legal name and is not wealthy would be available within the network. Even if the thugs could not access the information within the network, after receiving the instructions they could have realized that Treehorn meant the Big Lebowski (the feed-forward step).

Note that Treehorn said he wanted the money Bunny owed him and ordered his thugs to get it from her husband. Nothing about the way The Dude lived suggested that he could cover that debt. The thugs should have realized immediately that somebody wealthy would live in a nicer house, and that Bunny’s husband would likely live with her. By paying attention to all the information they had been given and uncovered in the ramshackle apartment, they should have realized that they had the wrong guy.

This lack of context went both ways. The Dude did not have enough information at the start of the film to understand what was happening. His neural network did not know Jackie Treehorn, the other Lebowskis, Karl Hungus and the Nihilists, or their relationships with one another. He acquired more information and learned after each interaction with these characters.

Moreover, when The Dude learns from Maude Lebowski that her father does not have any money of his own, he realizes that the Big Lebowski was taking advantage of the situation to embezzle money from his foundation. Maude fulfilled the role of a transformer by helping The Dude make sense of the information, which eventually led him to realize what the Big Lebowski had done. The Dude’s process of acquiring information, learning, and adjusting based on new prompts and information closely resembles that of an LLM.

The Data Abides

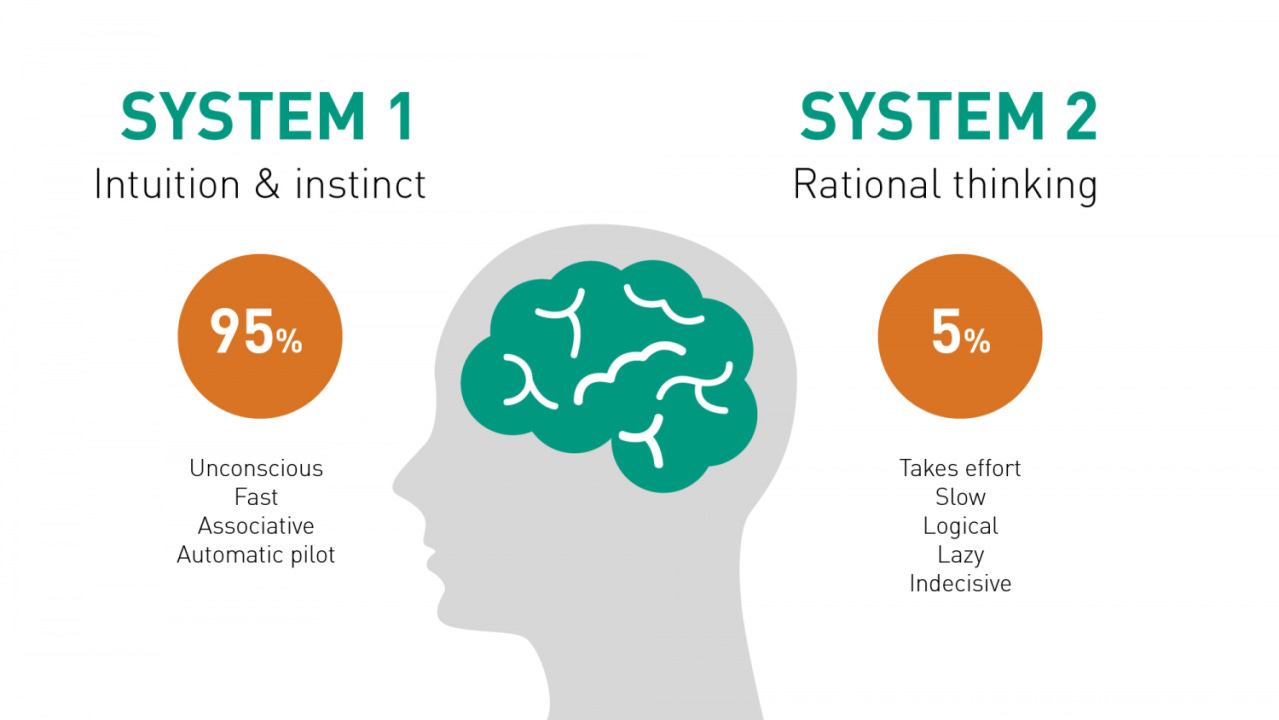

LLMs need much more data and context to be useful because, compared to humans, AI is still not that intelligent. In a framework popularized by the late Daniel Kahneman, human thinking can be divided into two systems:

System 1 is fast, instinctive thinking that can be retrieved and carried out within a few seconds.

System 2 is slower, more complex thinking that takes much more reflection.

System 2 allows inferences based on information or context that might not be immediately evident. Humans have the luxury of using both systems, whereas LLMs can only use System 1. To compensate, these artificially intelligent models need enormous numbers of parameters, transformer layers, and other tools to make sense of things and provide useful results. Faced with incomplete information, Treehorn’s thugs failed to engage their System 2 thinking, which led them to urinate on an innocent man’s rug.

To sum up, LLMs work by training on large data sets and are then fine-tuned with the help of human intervention. Using word vectors and transformers, LLMs compensate for their lack of human intelligence through prompt identification and association. These models produce better, more sophisticated outputs with each new version, in part because growing numbers of parameters and transformer layers give them more information and context.

The Big Lebowski is the perfect film to show the difficulty machines (i.e. thugs) have accomplishing simple requests when missing relevant information and context.

Well, that’s just like, my opinion man.

What’s yours?

Per my about page, White Noise is a work of experimentation. I view it as a sort of thinking aloud, a stress testing of my nascent ideas. Through it, I hope to sharpen my opinions against the whetstone of other people’s feedback, commentary, and input.

If you want to discuss any of the ideas or musings mentioned above or have any books, papers, or links that you think would be interesting to share on a future edition of White Noise, please reach out to me by replying to this email or following me on X (formerly Twitter).

With sincere gratitude,

Tom

[1] A neural network is simply the structure by which artificial intelligence teaches models to process data.

[2] As far as I know, pizza and pasta are in no way linked to pandas, unless you have found yourself at a joint Panda Express and Domino’s late-night drive-thru.

[3] This assumes that this information is all available in their neural network.

Very glad we got to work on this one together!